Content Library

Explore a diverse collection of guides, product briefs, e-books, webinars, and videos, all crafted to enhance your understanding and skills in Java programming.

Feb 2, 2026

The 2025 State of Container Security

Jan 29, 2026

JDBC Connection Pools in Microservices. Why They Break Down (and What to Do Instead)

In this livestream, Catherine is joined by Rogerio Robetti, the founder of Open J Proxy, to discuss why traditional JDBC connection pools break down when teams migrate to microservices, and what a more efficient and reliable approach to database access in a microservice architecture looks like.

Jan 27, 2026

Sizing JDBC Connection Pools for Real Production Load

Many production outages start with connection pool exhaustion: your app waits seconds for a connection while the queries themselves take milliseconds. Yet most teams run default settings that collapse under load. This video shows how to configure connection pools that survive real production traffic: sizing them based on database limits and thread counts, setting timeouts that prevent cascading failures, and deploying Open J Proxy, an open-source database proxy, for centralized connection management with virtual connection handles, client-side load balancing, and slow query segregation. It is aimed at senior Java developers, DevOps engineers, and architects who need database performance that holds under pressure.
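The sizing rules above boil down to simple arithmetic: every replica's pool can fill up at the same time, so the fleet-wide total must stay under the database's connection limit, and a pool larger than the number of threads that can use it adds no throughput. The Java sketch below assumes identical replicas sharing one database and a fixed number of connections reserved for admin tools; the class, method names, and numbers are hypothetical examples, not settings from the video.

```java
/**
 * Back-of-the-envelope JDBC pool sizing. All inputs are hypothetical;
 * real values come from your database configuration and load tests.
 */
public class PoolSizing {

    /** Total connections the fleet opens if every pool fills up at once. */
    static int worstCaseConnections(int replicas, int poolSizePerReplica) {
        return replicas * poolSizePerReplica;
    }

    /**
     * Largest per-replica pool that keeps the fleet under the database limit,
     * after reserving some connections for admin tools, migrations, etc.
     */
    static int maxPoolPerReplica(int dbMaxConnections, int reserved, int replicas) {
        if (replicas <= 0) throw new IllegalArgumentException("replicas must be > 0");
        return Math.max(0, (dbMaxConnections - reserved) / replicas);
    }

    /**
     * Pool size capped by both the database budget and the number of worker
     * threads that can actually hold a connection at the same time.
     */
    static int recommendedPoolSize(int dbMaxConnections, int reserved,
                                   int replicas, int workerThreads) {
        return Math.min(workerThreads, maxPoolPerReplica(dbMaxConnections, reserved, replicas));
    }

    public static void main(String[] args) {
        // Hypothetical setup: DB limit 200, 10 reserved, 8 replicas, 50 worker threads each
        int size = recommendedPoolSize(200, 10, 8, 50);
        System.out.println("Pool size per replica: " + size);                     // 23, since (200 - 10) / 8 = 23
        System.out.println("Worst-case total: " + worstCaseConnections(8, size)); // 184
    }
}
```

Note that doubling the replica count halves the safe per-replica pool, which is one reason pools that were sized for a monolith tend to break down after a move to microservices.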

Jan 22, 2026

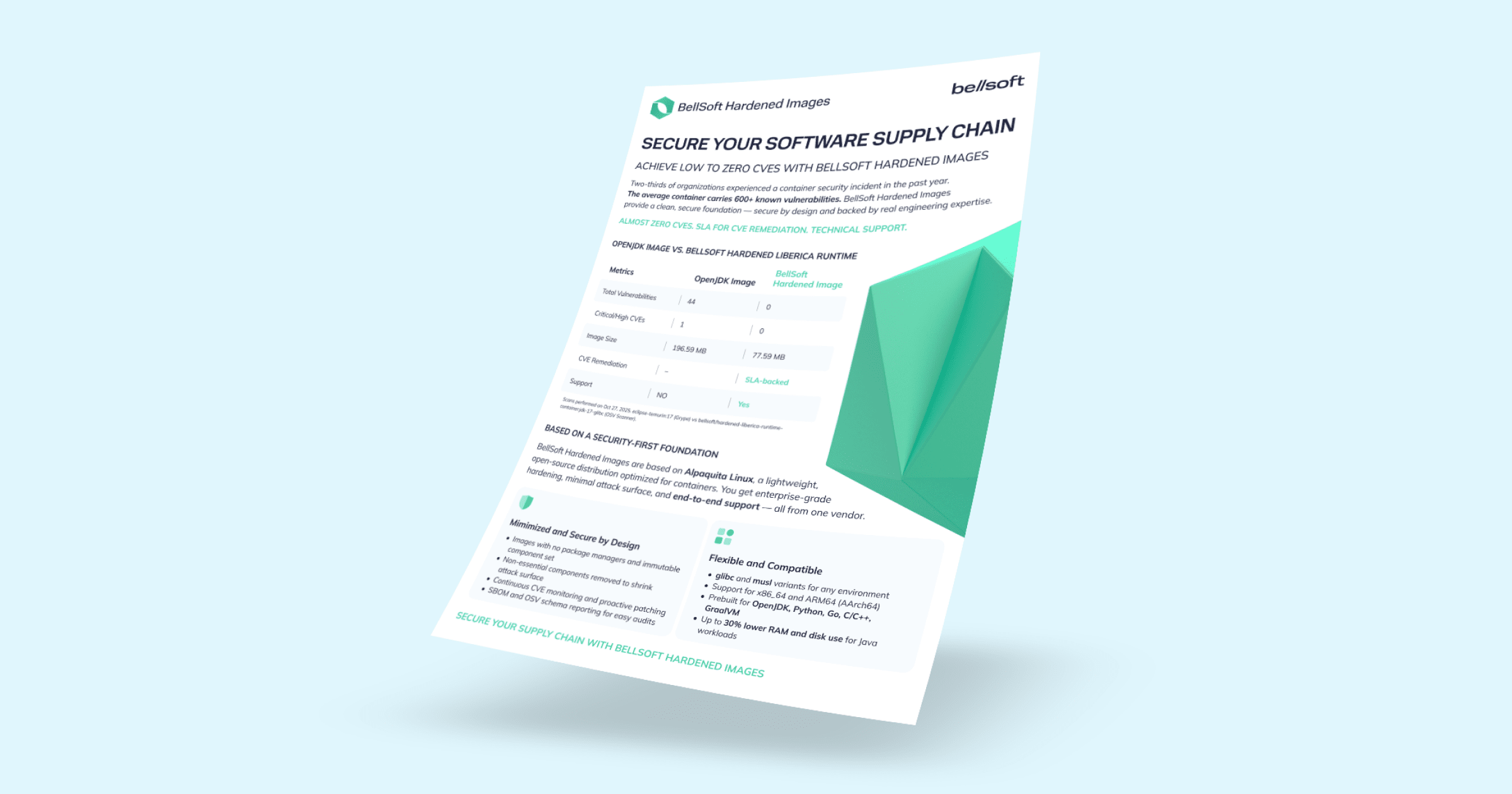

BellSoft Hardened Images: Product Brief

Jan 20, 2026

JDBC vs ORM vs jOOQ: Choose the Right Java Database Tool

Still unsure about the difference between JPA, Hibernate, JDBC, and jOOQ, and when to use each? This video clarifies the entire Java database access stack with real, production-oriented examples. We start at the foundation: JDBC, the low-level API that every other tool ultimately relies on to talk to the database. Then we cover the ORM concept, JPA as the ORM specification, Hibernate as an implementation and extension of JPA, and Blaze Persistence as a powerful upgrade to the JPA Criteria API. From there, we take a different path with jOOQ: a database-first, SQL-centric approach that provides type-safe queries and catches many SQL errors at compile time instead of at runtime. You’ll see when raw JDBC makes sense for small, focused services, when Hibernate fits CRUD-heavy domains, and when jOOQ excels at complex reporting and analytics. We discuss real performance pitfalls such as N+1 queries and lazy loading, and show practical combination strategies like “JPA for CRUD, jOOQ for reports.” The goal is to give you the clarity to make informed architectural decisions based on domain complexity, query patterns, and long-term maintainability.
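To make the N+1 pitfall concrete, here is a toy Java simulation with no database or ORM involved: a hypothetical `FakeDb` class only counts "queries". Loading each book's author one at a time, as lazy loading does when you iterate over a collection, costs one query for the list plus one per row, while fetching all authors in a single batch (the effect of a join fetch or an `IN (...)` query) keeps the count constant.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy N+1 simulation: FakeDb only counts "queries"; no real database or ORM is used. */
public class NPlusOneDemo {

    record Author(long id, String name) {}
    record Book(long id, long authorId, String title) {}

    static class FakeDb {
        int queryCount = 0;
        final List<Book> books = List.of(
                new Book(1, 10, "A"), new Book(2, 11, "B"), new Book(3, 12, "C"));
        final Map<Long, Author> authors = Map.of(
                10L, new Author(10, "Ann"), 11L, new Author(11, "Bob"), 12L, new Author(12, "Cy"));

        List<Book> findAllBooks() { queryCount++; return books; }

        Author findAuthor(long id) { queryCount++; return authors.get(id); }

        // One round trip for many ids, like a JOIN FETCH or WHERE id IN (...)
        Map<Long, Author> findAuthors(List<Long> ids) {
            queryCount++;
            Map<Long, Author> result = new HashMap<>();
            for (long id : ids) result.put(id, authors.get(id));
            return result;
        }
    }

    /** Lazy-loading style: 1 query for the books + 1 query per book. */
    static int lazyStyle(FakeDb db) {
        for (Book b : db.findAllBooks()) {
            db.findAuthor(b.authorId());
        }
        return db.queryCount;
    }

    /** Batch style: 1 query for the books + 1 query for all of their authors. */
    static int batchStyle(FakeDb db) {
        List<Book> books = db.findAllBooks();
        List<Long> ids = new ArrayList<>();
        for (Book b : books) ids.add(b.authorId());
        db.findAuthors(ids);
        return db.queryCount;
    }

    public static void main(String[] args) {
        System.out.println("lazy:  " + lazyStyle(new FakeDb()) + " queries");  // 4
        System.out.println("batch: " + batchStyle(new FakeDb()) + " queries"); // 2
    }
}
```

With three books the difference is small, but the lazy variant grows linearly with the result size while the batch variant stays at two round trips.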

Jan 13, 2026

Hibernate: Ditch or Double Down? When ORM Isn't Enough

Every Java team debates Hibernate at some point: productivity champion or performance liability? It can be both. This video shows you when to rely on Hibernate's ORM magic and when to drop down to SQL. We walk through production scenarios: domain models with many-to-many relationships where Hibernate excels, analytical reports with window functions where JDBC dominates, and hybrid architectures that use both in the same Spring Boot codebase. You'll see real code examples: the N+1 query trap that kills performance, complex window functions and anti-joins that are hard to express through Hibernate, equals/hashCode pitfalls with lazy loading, and practical first- and second-level caching strategies. We also explore how Hibernate works under the hood: translating HQL into database-specific SQL dialects, managing sessions and transactions through JDBC, and implementing the JPA specification. The strategic insight: modern applications need both ORM convenience for transactional business logic and SQL precision for data-intensive analytics. Use Hibernate for CRUD and relationship management. Use SQL where ORM abstractions leak or performance demands direct control.
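One well-known equals/hashCode pitfall with JPA entities is basing `hashCode` on a database-generated id: an entity added to a `HashSet` before it is persisted ends up in the wrong bucket once the id is assigned. The plain-Java sketch below simulates persistence by setting the id by hand; both classes are hypothetical. The safer variant uses `instanceof` (Hibernate lazy-loading proxies are subclasses, so `getClass()` comparisons fail for them), reads the other object's id through a getter rather than a field, and returns a constant per-class hash code. It is one commonly recommended pattern, not necessarily the one shown in the video.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

/** Simulates persist() by assigning an id after the entity is already in a HashSet. */
public class EntityEqualityDemo {

    /** Naive entity: hashCode changes once the id is assigned. */
    static class NaiveOrder {
        Long id; // null until "persisted"

        @Override public boolean equals(Object o) {
            return o instanceof NaiveOrder other && Objects.equals(id, other.id);
        }
        @Override public int hashCode() { return Objects.hashCode(id); }
    }

    /** Safer pattern: equal only if both ids are non-null and equal; constant hashCode. */
    static class SafeOrder {
        private Long id;

        Long getId() { return id; }
        void setId(Long id) { this.id = id; }

        @Override public boolean equals(Object o) {
            if (this == o) return true;
            if (!(o instanceof SafeOrder other)) return false; // proxy subclasses still pass
            return id != null && id.equals(other.getId());    // getter, not field, for proxies
        }
        @Override public int hashCode() { return SafeOrder.class.hashCode(); }
    }

    static boolean naiveStillFound() {
        Set<NaiveOrder> set = new HashSet<>();
        NaiveOrder o = new NaiveOrder();
        set.add(o);             // stored under hashCode(null) == 0
        o.id = 42L;             // simulate the database assigning an id on persist
        return set.contains(o); // now looks in the bucket for hashCode 42
    }

    static boolean safeStillFound() {
        Set<SafeOrder> set = new HashSet<>();
        SafeOrder o = new SafeOrder();
        set.add(o);
        o.setId(42L);
        return set.contains(o);
    }

    public static void main(String[] args) {
        System.out.println("naive found after persist: " + naiveStillFound()); // false
        System.out.println("safe found after persist:  " + safeStillFound());  // true
    }
}
```

The trade-off of a constant hash code is that all instances of the class share one bucket, which is usually acceptable for the small collections typical of entity associations.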

Dec 30, 2025

Java in 2025: LTS Release, AI on JVM, Framework Modernization

Java in 2025 isn't about headline features; it's about how production systems changed under the hood. While release notes focus on individual JEPs, the real story is how the platform, frameworks, and tooling evolved to improve stability, performance, and long-term maintainability. In this video, we look at Java from a production perspective. What does Java 25 LTS mean for teams planning to upgrade? How are memory efficiency, startup time, and observability getting better? Why do changes like Scoped Values and AOT optimizations matter beyond benchmarks? We also cover the broader ecosystem: Spring Boot 4 and Spring Framework 7, AI on the JVM with Spring AI and LangChain4j, Kotlin's growing role in backend systems, and tooling updates that make upgrades easier. Finally, we touch on container hardening and why runtime and supply-chain decisions matter just as much as language features.

Dec 24, 2025

I Solved Advent of Code 2025 in Kotlin: Here's How It Went

Every year, Advent of Code spawns thousands of solutions, but few engineers step back to see the bigger picture. This is a complete walkthrough of all 12 days of the 2025 event, focused on engineering patterns rather than puzzle statements. We cover scalable techniques: interval math without brute force, dynamic programming, graph algorithms (JGraphT), geometry with Java AWT Polygon, and optimization problems that need constraint solvers like ojAlgo. You'll see how Java and Kotlin handle real constraints, how visualizations validate assumptions, and when to reach for libraries instead of writing everything from scratch. If you love puzzles, programming, or both, and want to learn how to solve them on the JVM, this is for you.
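Interval math without brute force usually means sorting ranges by their start and merging overlaps in a single pass, so the cost depends on the number of ranges rather than on how many values they cover. A minimal Java sketch, assuming inclusive integer bounds and a hypothetical input (not an actual puzzle input from the video):

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Merge overlapping inclusive ranges and count covered integers without enumerating them. */
public class Intervals {

    record Range(long start, long end) {} // inclusive bounds, start <= end

    static List<Range> merge(List<Range> input) {
        List<Range> sorted = new ArrayList<>(input);
        sorted.sort(Comparator.comparingLong(Range::start));
        List<Range> merged = new ArrayList<>();
        for (Range r : sorted) {
            // Overlapping or directly adjacent (end + 1) ranges collapse into one
            if (!merged.isEmpty() && r.start() <= merged.get(merged.size() - 1).end() + 1) {
                Range last = merged.remove(merged.size() - 1);
                merged.add(new Range(last.start(), Math.max(last.end(), r.end())));
            } else {
                merged.add(r);
            }
        }
        return merged;
    }

    /** Number of integers covered, in O(n log n) regardless of range widths. */
    static long coveredCount(List<Range> input) {
        long total = 0;
        for (Range r : merge(input)) total += r.end() - r.start() + 1;
        return total;
    }

    public static void main(String[] args) {
        List<Range> ranges = List.of(
                new Range(3, 5), new Range(10, 14), new Range(16, 20), new Range(12, 18));
        System.out.println(merge(ranges));        // [Range[start=3, end=5], Range[start=10, end=20]]
        System.out.println(coveredCount(ranges)); // 14
    }
}
```

Because only the endpoints are touched, the same code handles ranges spanning trillions of values as quickly as tiny ones.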

Dec 18, 2025

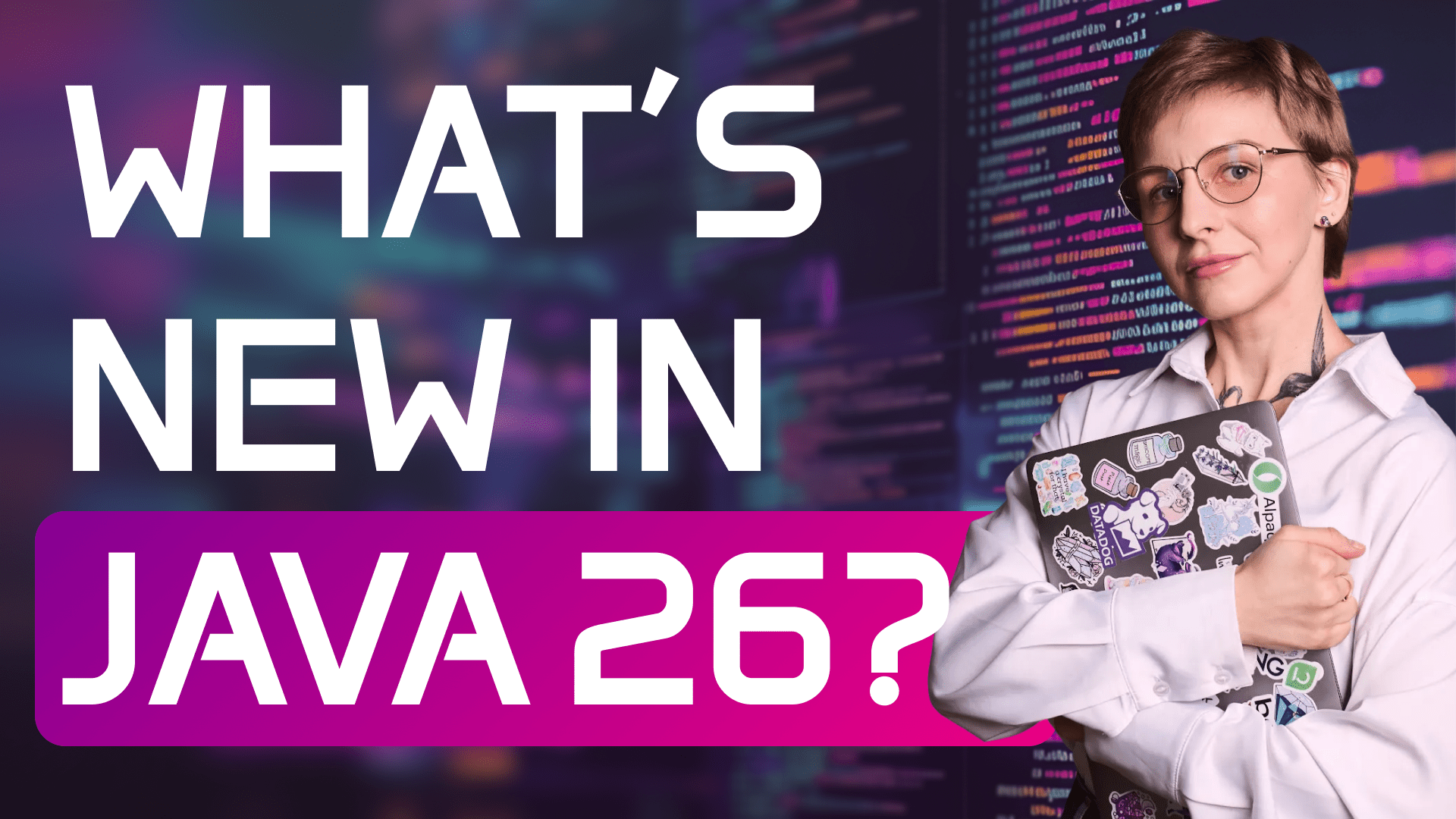

Java 26 Preview: New JEPs and What They Mean for You

Java 26 is the next feature release, with changes aimed at performance, security, and developer experience. This video discusses the upcoming JDK 26 release and highlights ten JEPs, including JEP 500. JEP 500 prepares developers for future restrictions on mutating final fields through deep reflection, reinforcing the role of final fields in maintaining immutable state. This matters for writing robust code and for understanding the difference between mutable and immutable data, especially when designing immutable classes in Java. We also touch on the broader implications for functional programming in Java.
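JEP 500 targets code that changes `final` fields through deep reflection, that is, calling `Field.setAccessible(true)` and then one of the `Field.set*` methods. The sketch below shows that pattern on a hypothetical `Temperature` class. On JDK releases before 26 it succeeds silently; JDK 26 is expected to print a warning, and a later release is expected to reject it by default, in which case `mutate` throws an `IllegalStateException`.

```java
import java.lang.reflect.Field;

/** Mutating a final instance field via deep reflection: the pattern JEP 500 warns about. */
public class FinalFieldMutation {

    static final class Temperature {
        private final int celsius;
        Temperature(int celsius) { this.celsius = celsius; }
        int celsius() { return celsius; }
    }

    static int mutate(Temperature t, int newValue) {
        try {
            Field f = Temperature.class.getDeclaredField("celsius");
            f.setAccessible(true);  // deep reflection: suppress language access checks
            f.setInt(t, newValue);  // writes to a field declared final
        } catch (ReflectiveOperationException e) {
            // A JDK that denies final-field mutation surfaces it here
            throw new IllegalStateException(e);
        }
        return t.celsius();
    }

    public static void main(String[] args) {
        Temperature t = new Temperature(20);
        System.out.println(mutate(t, 99)); // 99 on JDKs that still permit the mutation
    }
}
```

If a library you depend on relies on this trick (for example, for deserialization into final fields), JDK 26's warnings are the signal to look for a constructor-based or record-based alternative.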

Dec 12, 2025

Liberica JDK: White Paper

Dec 12, 2025

Liberica Native Image Kit: White Paper

Dec 12, 2025

Liberica JDK Performance Edition: White Paper

Dec 12, 2025

Liberica JDK 6&7: White Paper

Dec 12, 2025

Alpaquita Linux: White Paper

Dec 12, 2025

Will AI Replace Developers? A Vibe Coding Reality Check 2025

Can AI replace software engineers? ChatGPT, Copilot, and LLM-powered vibe coding tools promise to automate development, but after testing them against 17 years of production experience, the answer is more nuanced than the hype suggests. Full project generation produces over-engineered code that's hard to refactor. AI assistants excel at boilerplate but fail at business logic. MCP servers reduce hallucinations but can create context overload. Meanwhile, DevOps automation actually works. This breakdown separates AI capabilities from marketing promises, which is essential for teams integrating LLMs and copilots without compromising code quality or architectural decisions.

Dec 12, 2025

JRush | Container Essentials: Fast Builds, Secure Images, Zero Vulnerabilities

A web conference for Java developers focused on hands-on strategies for building high-performance containers, eliminating CVEs, and detecting security issues before production.

Dec 2, 2025

Build RAG System with Spring AI: No More AI Lies

Struggling to find answers in your own documentation? Your LLM is too. Hallucinations happen because models don’t know your data. RAG (Retrieval-Augmented Generation) turns unstructured documents into searchable vector embeddings, so your LLM retrieves facts instead of inventing them. This tutorial walks through the full RAG workflow in Spring AI: document ingestion with TikaDocumentReader, embedding generation, vector storage (pgvector, Chroma, Milvus, Oracle), and similarity-based retrieval. You’ll build two endpoints: one for uploading documents and one for answering questions strictly from your indexed data. When the system doesn’t know the answer, it says so instead of producing confident nonsense. Designed for Java teams bringing AI into production systems where accuracy matters, the tutorial covers the pattern and the code you need to deploy an LLM that answers from your data instead of hallucinating.
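The retrieval half of RAG comes down to comparing a query embedding with stored chunk embeddings and passing only sufficiently similar chunks to the model. The in-memory Java sketch below uses cosine similarity, hand-written three-dimensional toy vectors, a top-k limit, and a similarity threshold; all names, vectors, and the 0.8 threshold are assumptions for illustration. It does not use the Spring AI API: in the tutorial's setup, an embedding model and a vector store such as pgvector perform these steps.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Toy in-memory retrieval step of a RAG pipeline; vectors are hand-written, not model output. */
public class TinyRetriever {

    record Chunk(String text, double[] embedding) {}

    static double cosine(double[] a, double[] b) {
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        return (na == 0 || nb == 0) ? 0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
    }

    /** Top-k chunks whose similarity to the query is at least the threshold, best first. */
    static List<Chunk> retrieve(List<Chunk> store, double[] query, int k, double threshold) {
        List<Chunk> hits = new ArrayList<>();
        for (Chunk c : store) {
            if (cosine(c.embedding(), query) >= threshold) hits.add(c);
        }
        hits.sort(Comparator.comparingDouble((Chunk c) -> cosine(c.embedding(), query)).reversed());
        return hits.subList(0, Math.min(k, hits.size()));
    }

    /** Grounded prompt, or empty so the caller can answer "I don't know". */
    static Optional<String> buildPrompt(List<Chunk> store, double[] query, String question) {
        List<Chunk> context = retrieve(store, query, 2, 0.8);
        if (context.isEmpty()) return Optional.empty();
        StringBuilder sb = new StringBuilder("Answer only from the context below.\n");
        for (Chunk c : context) sb.append("- ").append(c.text()).append('\n');
        return Optional.of(sb.append("Question: ").append(question).toString());
    }

    public static void main(String[] args) {
        List<Chunk> store = List.of(
                new Chunk("Refunds are processed within 5 days.", new double[]{0.9, 0.1, 0.0}),
                new Chunk("The office is closed on Sundays.", new double[]{0.0, 0.2, 0.9}));
        System.out.println(buildPrompt(store, new double[]{1.0, 0.0, 0.0}, "How fast are refunds?"));
        System.out.println(buildPrompt(store, new double[]{0.0, 1.0, 0.0}, "Who is the CEO?")); // Optional.empty
    }
}
```

Returning an empty `Optional` when nothing clears the threshold is what lets the endpoint say it doesn't know instead of letting the model improvise.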

Nov 20, 2025

Hardened Container Images 101: What, Why, and How for DevSecOps [2025]

Why do production containers still ship with 600+ CVEs, package managers, and compilers? Most teams inherit bloated base images without ever checking what’s inside. Hardened container images fix this by removing unnecessary tools, enforcing immutability, and providing verifiable provenance. This tutorial breaks down the four pillars of hardened images—minimal base layers, low-to-zero CVEs, immutable runtimes, and SBOM-backed provenance—and shows how they compare to traditional and distroless images. You’ll also see practical examples: migrating from OpenJDK to hardened Liberica images with multi-stage builds, pinning images by digest, verifying signatures with Cosign, and integrating these checks into a production-ready CI/CD pipeline. Perfect for Java developers, DevOps engineers, and architects who want compliance-ready containers and dramatically fewer CVEs—without reinventing security every sprint.
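Pinning by digest means referencing an image as `name@sha256:<digest>` instead of by a mutable tag. A CI step can reject unpinned references before a build proceeds; the Java sketch below is a hypothetical helper for that check (the image names and the all-`a` digest are placeholders), not part of Cosign or any specific CI tool. It only checks the reference format; verifying signatures with Cosign remains a separate step.

```java
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical CI helper: flags image references not pinned by a sha256 digest. */
public class DigestPinCheck {

    // "<anything>@sha256:" followed by exactly 64 lowercase hex characters
    private static final Pattern PINNED = Pattern.compile(".+@sha256:[0-9a-f]{64}");

    static boolean isPinned(String imageRef) {
        return PINNED.matcher(imageRef).matches(); // whole-string match
    }

    static List<String> unpinned(List<String> refs) {
        return refs.stream().filter(r -> !isPinned(r)).toList();
    }

    public static void main(String[] args) {
        String digest = "a".repeat(64); // placeholder, not a real image digest
        List<String> refs = List.of(
                "registry.example.com/app:1.0",
                "registry.example.com/app:1.0@sha256:" + digest);
        System.out.println(unpinned(refs)); // [registry.example.com/app:1.0]
    }
}
```

Failing the pipeline whenever `unpinned` is non-empty keeps a retagged upstream image from silently changing what you ship.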

Nov 14, 2025

Cloud Native Spring: Crafting the Perfect Java image for K8S

Nov 14, 2025

Spring AI: Streaming LLM Tokens with NDJSON in Spring Boot

Streaming LLM responses smoothly is harder than it looks. With SSE, the spacing between LLM tokens often gets lost and words run together. This video shows how to fix that using Spring AI, WebFlux, and NDJSON. You’ll learn why Server-Sent Events can fail with modern LLM tokenizers, how NDJSON provides reliable token streaming, and how to build a production-ready pipeline with proper error handling and backpressure control. We cover configuring the Spring AI ChatClient with Ollama, creating a reactive NDJSON endpoint, handling errors with onErrorResume, managing backpressure with limitRate, and consuming the NDJSON stream in a Vaadin frontend using WebClient. The result: stable, clean LLM streaming. Tested with Spring Boot 3.5.7, Java 25 (Liberica JDK), and Ollama, both locally and in Docker.
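One concrete way SSE loses spacing: the SSE specification tells clients to strip a single space after `data:`, so if a server writes `data:` immediately followed by a token such as `" world"`, the client receives `world`. NDJSON wraps each token in a JSON string, so leading spaces survive. The sketch below simulates both encodings in plain Java with a hand-rolled, minimal JSON escaper (an assumption for illustration; a real application would rely on Jackson or Spring's codecs) and ignores tokens containing newlines on the SSE side.

```java
import java.util.List;

/** Simulates SSE vs NDJSON token transport to show where leading spaces disappear. */
public class TokenStreaming {

    /** Server side: "data:" immediately followed by the raw token. */
    static String toSseEvent(String token) {
        return "data:" + token + "\n\n";
    }

    /** Client side, per the SSE spec: drop ONE leading space after "data:". */
    static String parseSseData(String event) {
        String value = event.substring("data:".length(), event.indexOf('\n'));
        return value.startsWith(" ") ? value.substring(1) : value;
    }

    /** NDJSON: each token becomes a JSON string field on its own line. */
    static String toNdjsonLine(String token) {
        String escaped = token.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
        return "{\"token\":\"" + escaped + "\"}\n";
    }

    /** Minimal decoder for lines produced above (not a general JSON parser). */
    static String parseNdjsonLine(String line) {
        String body = line.substring("{\"token\":\"".length(), line.lastIndexOf("\"}"));
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < body.length(); i++) {
            char c = body.charAt(i);
            if (c == '\\' && i + 1 < body.length()) {
                char next = body.charAt(++i);
                out.append(next == 'n' ? '\n' : next); // handles \n, \" and \\
            } else {
                out.append(c);
            }
        }
        return out.toString();
    }

    static String viaSse(List<String> tokens) {
        StringBuilder sb = new StringBuilder();
        for (String t : tokens) sb.append(parseSseData(toSseEvent(t)));
        return sb.toString();
    }

    static String viaNdjson(List<String> tokens) {
        StringBuilder sb = new StringBuilder();
        for (String t : tokens) sb.append(parseNdjsonLine(toNdjsonLine(t)));
        return sb.toString();
    }

    public static void main(String[] args) {
        List<String> tokens = List.of("Hello", " world", ",", " Java", "!");
        System.out.println(viaSse(tokens));    // Helloworld,Java!
        System.out.println(viaNdjson(tokens)); // Hello world, Java!
    }
}
```

The NDJSON framing also makes each line independently parseable, which is what lets a client process tokens one at a time as they arrive.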

Nov 6, 2025

Docker Container Image Security: 13 Best Practices

This video presents 13 practical recommendations for reducing your attack surface and detecting malicious activity more quickly. You’ll learn how to create simple, immutable, and deterministic images using multi-stage builds, distroless bases, and non-root users. We cover SBOM generation with Syft, provenance verification with Cosign, CVE scanning workflows, and secret management strategies. From choosing LTS base images like Alpaquita Linux to implementing host-level protections, these practices will help you confidently deliver secure containers. It’s ideal for Java developers, DevOps engineers, and architects building production-grade infrastructure.

Oct 31, 2025

Vaadin Tutorial: From Spring Boot to Beautiful UI Fast

In this guide, I’ll show you how to build a fully functional Java application with authentication, data tables, filters, and a custom cyberpunk theme using Vaadin.

Oct 23, 2025

Top 7 JavaFX Testing Mistakes You Need To Avoid!

Stop making these common JavaFX testing mistakes! No more random NullPointerExceptions or deadlocks: in this video, we’ll show you how to fix the 7 most frequent TestFX issues when testing JavaFX applications. Learn how to work with the JavaFX Application Thread, integrate with Spring Boot, avoid event-queue race conditions, handle pixel-level differences between test environments, set up headless continuous integration with Monocle, and properly separate business logic from UI tests.
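Separating business logic from UI tests usually means moving rules out of controllers into plain classes that need no JavaFX Application Thread, TestFX, or Monocle. The sketch below is a hypothetical `LoginValidator` with an assumed eight-character password policy; a JavaFX controller would only call `validate` and map the result to the view, leaving TestFX tests to cover the wiring.

```java
/** Hypothetical UI-free validation logic, unit-testable without any JavaFX runtime. */
public class LoginValidator {

    enum Result { OK, EMPTY_USERNAME, PASSWORD_TOO_SHORT }

    static final int MIN_PASSWORD_LENGTH = 8; // assumed policy for this example

    static Result validate(String username, String password) {
        if (username == null || username.isBlank()) return Result.EMPTY_USERNAME;
        if (password == null || password.length() < MIN_PASSWORD_LENGTH) return Result.PASSWORD_TOO_SHORT;
        return Result.OK;
    }

    public static void main(String[] args) {
        // A controller maps each Result to labels or styles; these checks run as fast unit tests.
        System.out.println(validate("", "secret123"));     // EMPTY_USERNAME
        System.out.println(validate("ana", "short"));      // PASSWORD_TOO_SHORT
        System.out.println(validate("ana", "longenough")); // OK
    }
}
```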

Oct 16, 2025

All 7 Java Garbage Collectors Explained

In this complete guide to Java garbage collection, you will learn how the JVM memory model works, understand the differences between the Serial, Parallel, G1, ZGC, Shenandoah, CMS (removed in JDK 14), and Epsilon collectors, and find out which collector best suits your application's performance needs, from single-threaded programs to massive terabyte-scale heaps.
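After choosing a collector with a flag such as `-XX:+UseSerialGC` or `-XX:+UseZGC`, you can confirm which collectors the running JVM actually reports through the standard `java.lang.management` API. The class name is hypothetical; the reported names (for example `G1 Young Generation`) and the number of beans vary by collector and JDK version, and `getCollectionTime()` may return -1 when the time is undefined.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

/** Lists the garbage collectors the current JVM reports, with basic statistics. */
public class WhichGc {

    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.printf("%s: %d collections, %d ms total%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime());
        }
    }
}
```

Running it as `java -XX:+UseSerialGC WhichGc.java` and again with another collector flag is a quick way to check that a container's JVM options are actually being applied.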
